DNS is one of the critical services necessary for the proper operation of the Internet, and it is often a target of various cyber attacks. Operators of authoritative DNS servers therefore require robust solutions that offer enough performance for regular DNS traffic and can withstand attacks against the service. That is why performance is one of the aspects we focus on during the development of our authoritative DNS server, Knot DNS. Benchmarking is an inseparable part of the project, and we keep exploring ways to improve performance further. Recently we had a great opportunity to play with the epic 128-thread processor AMD EPYC 7702P. In this blog post I will share some results from its benchmarking.

Test environment

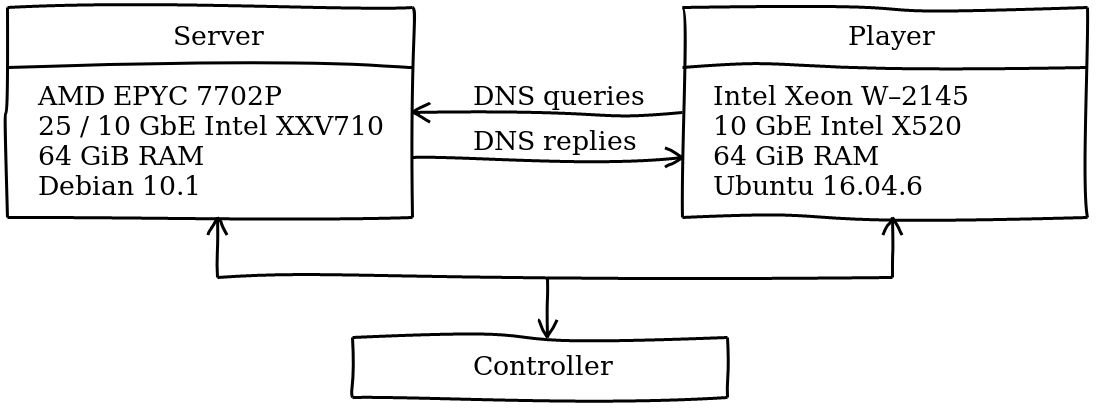

The basic setup for authoritative nameserver benchmarking consists of two physical servers directly interconnected using suitable network cards. So far we have been focusing on 10Gb Ethernet, as it is the most commonly used. During the benchmarking, one server (player) sends pre-generated or previously captured DNS queries at a specified rate to the tested server (server), where the DNS nameserver software is running. Both sent queries and received replies are counted, and the objective is usually to find the maximum query rate at which the nameserver is able to respond to all the queries. Optionally, the benchmarking can be automated by another server (controller). More information about our regular benchmarking can be found at https://www.knot-dns.cz/benchmark/. The diagram above introduces the hardware.
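The search for the maximum query rate described above can be automated. The following is a minimal Python sketch, not necessarily the tooling used for these benchmarks: `find_max_rate` and the `probe` callback are hypothetical names, and the sketch assumes that the nameserver answers every query up to some capacity and starts dropping queries above it.

```python
from typing import Callable, Tuple


def find_max_rate(
    probe: Callable[[int], Tuple[int, int]],
    low: int,
    high: int,
    step: int = 1000,
) -> int:
    """Binary-search the highest tested rate (queries per second)
    at which every sent query received a reply.

    `probe(rate)` is a hypothetical callback that replays traffic at
    `rate` and returns the pair (queries_sent, replies_received).
    """
    best = 0
    while low <= high:
        mid = (low + high) // 2
        sent, received = probe(mid)
        if received >= sent:
            # Nothing was lost at this rate, so try a higher one.
            best = mid
            low = mid + step
        else:
            # Some queries went unanswered, so back off.
            high = mid - step
    return best


# Simulated nameserver with an assumed capacity, for illustration only.
capacity = 1_234_567
simulated_probe = lambda rate: (rate, min(rate, capacity))
print(find_max_rate(simulated_probe, 1, 10_000_000))
```

Under the stated assumption, the returned rate is never above the real capacity and lies within two steps below it. A real measurement is noisy, so each probe would likely need to run for several seconds and be repeated.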

Episode UDP

UDP is still the dominant transport protocol used for DNS. It is simple and fast, but less reliable and less secure. In order to send plenty of DNS queries over UDP from one player, we have to bypass the network stack in the operating system using the Netmap framework in combination with the Tcpreplay utilities. Unfortunately, we had some issues with newer Linux kernels, so we use Linux 4.4 (Ubuntu 16.04). Because of the older kernel, we chose a somewhat older but proven Ethernet card, the Intel X520. An obvious consequence is that the server’s card, an Intel XXV710, had to be set to 10GbE. We thought it wouldn’t matter, but… 😉 We hit the limits!

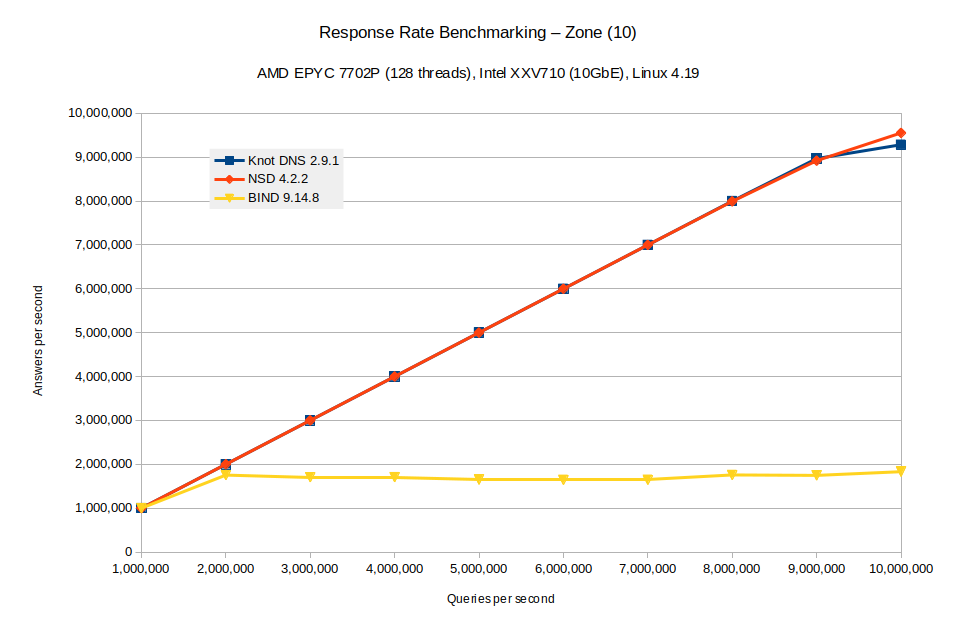

The chart above depicts the results for random queries to a tiny zone without DNSSEC. It is the simplest case with the highest response rate (see https://www.knot-dns.cz/benchmark/ for a detailed dataset description). We were unable to send more than 10M queries per second. That was the maximum for tcpreplay, which is a single-threaded utility.

The number of nameserver threads/processes was set to 128. Although the server’s network card offers up to 128 IO queues, the driver/kernel detected only 119. Fortunately, the usual rule “the number of queues should be the same as the number of threads” didn’t hold in this case. The maximum performance was observed with 48 queues configured. Don’t ask me why.

We also tried to test the latest BIND 9.15.6, but we came across some issues with a high number of UDP workers. Another observation relates to NSD. When it was unable to send more responses because of the saturated network, it emitted a flood of log messages, which could negatively affect system stability. This issue has already been fixed. Thanks, Wouter!

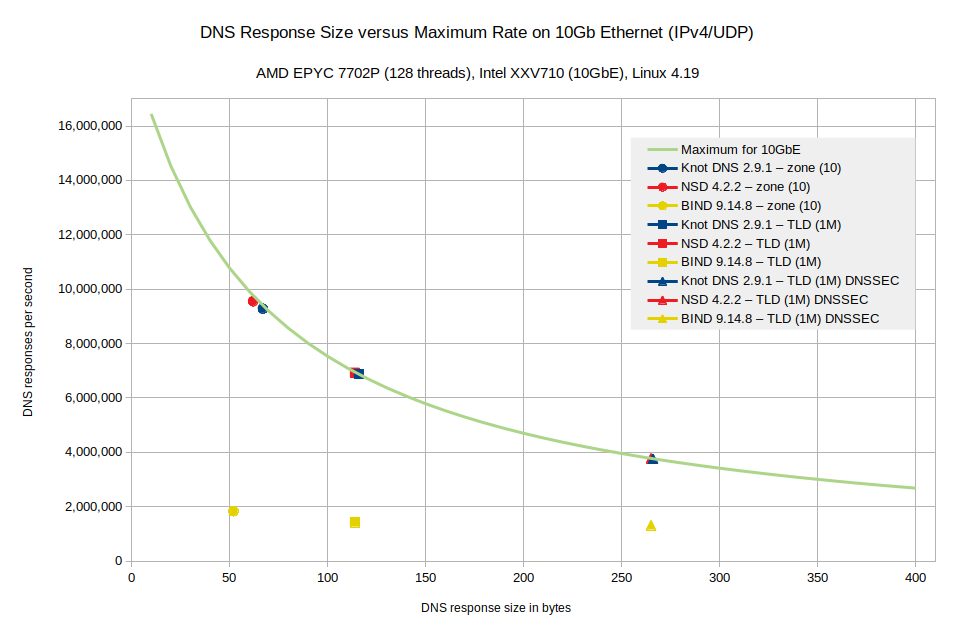

For all tested datasets, the 10Gb Ethernet was the major bottleneck. The chart above shows the relation between DNS response size and the maximum rate of such responses. In addition, the maximum values reached for several datasets are marked on the chart. Notice that each nameserver has a slightly different average response size, mostly due to different implementations of domain name compression.
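The relation between response size and maximum response rate on a saturated link can be approximated with simple arithmetic. The sketch below is an illustration only, assuming IPv4, UDP, no VLAN tag, and the standard Ethernet per-frame overhead; the function name `max_responses_per_second` is hypothetical and the numbers are theoretical line-rate limits, not measured results.

```python
LINK_BPS = 10_000_000_000  # 10Gb Ethernet

# Per-frame Ethernet overhead in bytes:
# preamble + SFD (8), header (14), FCS (4), inter-frame gap (12).
ETH_OVERHEAD = 8 + 14 + 4 + 12
IPV4_UDP_HEADERS = 20 + 8
MIN_ETH_PAYLOAD = 46  # shorter payloads are padded to this size


def max_responses_per_second(dns_size: int, link_bps: int = LINK_BPS) -> float:
    """Theoretical upper bound on UDP responses per second for a
    DNS message of `dns_size` bytes on a link of `link_bps` bits/s."""
    payload = max(dns_size + IPV4_UDP_HEADERS, MIN_ETH_PAYLOAD)
    wire_bytes = payload + ETH_OVERHEAD
    return link_bps / (wire_bytes * 8)


for size in (50, 100, 200, 500, 1000):
    rate = max_responses_per_second(size)
    print(f"{size:5d} B response: {rate / 1e6:6.2f} M responses/s at most")
```

The bound falls roughly in inverse proportion to the response size, which is why even a small difference in name compression shifts the maximum rate a nameserver can reach on the same link.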

Episode TCP

TCP is a less frequently used transport protocol for DNS, but it is necessary for its proper operation. There are various tools for TCP benchmarking. Unfortunately, none of them offers enough performance for us. So CZ.NIC, in cooperation with Brno University of Technology, has been developing another tool, dpdk-tcp-generator, which can satisfy our needs. It can read a PCAP file with pre-generated UDP traffic, randomize source addresses and ports, and replay it as TCP traffic at very high speed. For each query a new TCP connection is established.
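To illustrate what replaying UDP queries as TCP involves at the message level, here is a small Python sketch. It is not the code of dpdk-tcp-generator; the helper names `frame_for_tcp` and `random_source` are hypothetical, and the private 10.0.0.0/8 source range is an assumption made for the example. The one protocol fact it relies on is that DNS over TCP prefixes each message with a two-byte length field (RFC 1035, section 4.2.2).

```python
import random
import struct


def frame_for_tcp(dns_message: bytes) -> bytes:
    """Prefix a DNS message taken from a UDP payload with the
    two-byte, big-endian length field required over TCP."""
    if len(dns_message) > 0xFFFF:
        raise ValueError("DNS message too long for TCP framing")
    return struct.pack("!H", len(dns_message)) + dns_message


def random_source(rng: random.Random) -> tuple:
    """Pick a random source address and port for one connection.
    The 10.0.0.0/8 range is an assumption for this example."""
    address = "10.{}.{}.{}".format(
        rng.randint(0, 255), rng.randint(0, 255), rng.randint(1, 254)
    )
    port = rng.randint(1024, 65535)
    return address, port


# A hand-built query for "example.com. IN A", as it would appear in UDP.
query = (
    b"\x12\x34"      # message ID
    b"\x00\x00"      # flags: standard query
    b"\x00\x01"      # one question
    b"\x00\x00\x00\x00\x00\x00"  # no answer, authority, additional records
    b"\x07example\x03com\x00"    # QNAME
    b"\x00\x01"      # QTYPE A
    b"\x00\x01"      # QCLASS IN
)

rng = random.Random(42)
print(random_source(rng), frame_for_tcp(query).hex())
```

In a real generator each framed query would be sent on its own freshly established connection, so the cost of the TCP handshake and teardown is paid for every single query.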

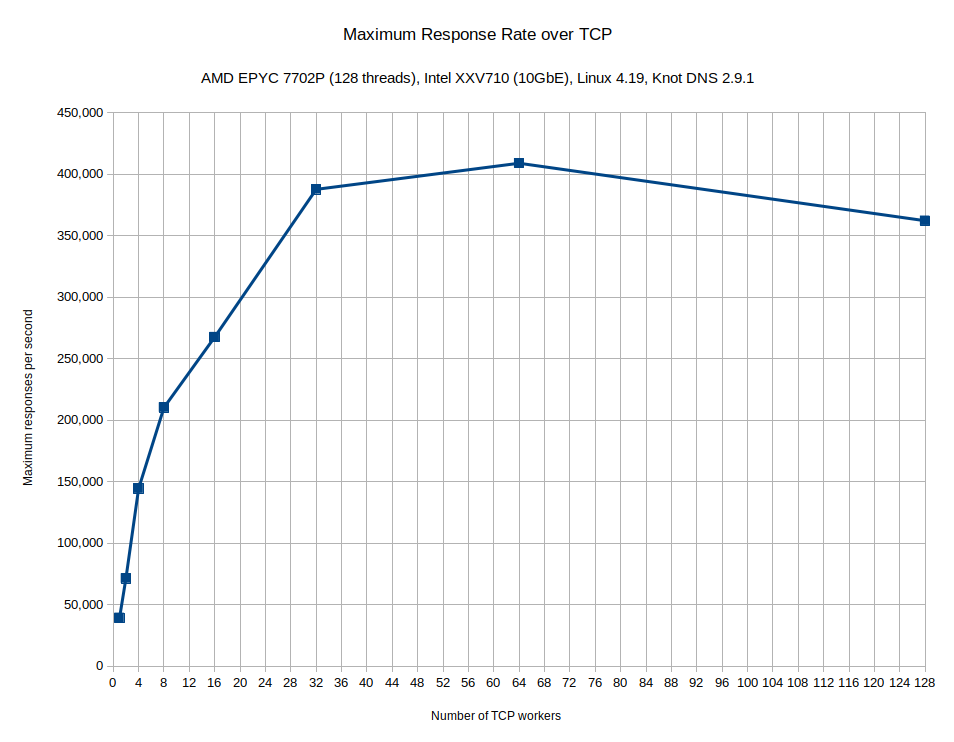

Finding an optimal TCP configuration is new territory for us, and the results for all the tested nameservers were not particularly exciting, so we focused on Knot DNS only. Knot DNS is a multi-threaded implementation. Since version 2.9.0 the SO_REUSEPORT socket option can be enabled for TCP workers. This option helps, but still, look at the chart above.

It is a terrible result, isn’t it? So we used a profiler. It showed massive lock contention in the Linux kernel when accepting and closing TCP connections.

Currently we have no idea how to overcome this bottleneck. What about you? For completeness, the limit on the TCP client count was set to 900k.
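For readers unfamiliar with the SO_REUSEPORT option mentioned above, the following Python sketch shows the general idea on Linux: each worker opens its own listening socket on the same address and port, and the kernel distributes incoming connections among them. This is only an illustration, not the Knot DNS implementation; the function name `open_reuseport_listeners` and the backlog value are assumptions.

```python
import socket


def open_reuseport_listeners(host: str, port: int, workers: int) -> list:
    """Open one TCP listening socket per worker, all bound to the
    same host and port by means of SO_REUSEPORT (Linux 3.9+)."""
    listeners = []
    for _ in range(workers):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        # The option must be set on every socket before bind().
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
        sock.bind((host, port))
        sock.listen(128)  # backlog value chosen arbitrarily
        if port == 0:
            # Port 0 lets the kernel choose; reuse that port afterwards.
            port = sock.getsockname()[1]
        listeners.append(sock)
    return listeners


listeners = open_reuseport_listeners("127.0.0.1", 0, 4)
print("listening sockets:", len(listeners),
      "on port", listeners[0].getsockname()[1])
for sock in listeners:
    sock.close()
```

Separate listening sockets give each worker its own accept queue, which removes contention on a single shared one. It does not remove the locking inside the kernel that the profiler pointed at.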

Conclusion

New AMD EPYC processors together with the right software allow demanding DNS operators to build really high-performance authoritative DNS servers. However, there are challenges ahead of the Knot DNS developers that have to be solved. First, we have to upgrade our testing infrastructure and tooling so we can further explore the performance limits. Any sponsorship is welcome 😀 Second, the lock contention when handling TCP connections must be mitigated, which is probably work for Linux kernel hackers.

Acknowledgement

Finally, I would like to thank Tomáš Soucha and Jan Petrák from Abacus Electric, Alexey Nechuyatov and Krzysztof Luka from AMD, and Alexander Lyamin from Qrator Labs for this opportunity, provided hardware, and perfect support.